Will AI be the end of us?


Image by PikiSuperstar on Freepik

Geoffrey Hinton, 75, often referred to as the 'godfather' of AI, quit Google in May 2023 so that he could speak freely about the dangers of AI. These dangers include a flood of misinformation, job losses and an 'existential risk' (human extinction) posed by the creation of true digital intelligence.

Hinton in 2013

Eviatar Bach, CC BY-SA 3.0 via Wikimedia Commons

In interviews with the New York Times and the BBC, he said that he regretted his contribution to AI. Google recruited him a decade earlier to help develop the company’s AI technology, and the approach he pioneered, artificial neural networks, led the way for current systems such as ChatGPT.

He said that until last year he believed Google had been a 'proper steward' of the technology, but that changed once Microsoft began incorporating a chatbot into its Bing search engine and Google grew concerned about the risk to its search business.

Hinton told the BBC that some of the dangers of AI chatbots were “quite scary”, warning they could become more intelligent than humans and could be exploited by 'bad actors'. “It’s able to produce lots of text automatically so you can get lots of very effective spambots. It will allow authoritarian leaders to manipulate their electorates, things like that.”

But, he added, he was also concerned about the “existential risk of what happens when these things get more intelligent than us”.

“I’ve come to the conclusion that the kind of intelligence we’re developing is very different from the intelligence we have,” he said. “So it’s as if you had 10,000 people and whenever one person learned something, everybody automatically knew it. And that’s how these chatbots can know so much more than any one person.”
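Hinton's analogy refers to the fact that a digital model can run as many identical copies that share the same weights. The Python sketch below is a toy illustration only, assuming a tiny two-weight linear model trained by gradient descent, with hypothetical data and function names; it is not how ChatGPT or any real chatbot is trained. Two copies each see different examples, and because they pool their updates into one shared set of weights, what one copy learns is "known" by both.

```python
# Toy illustration (hypothetical names and data): copies of one model share
# a single set of weights and average their gradient updates.

def gradient(w, data):
    """Mean-squared-error gradient of y = w[0]*x[0] + w[1]*x[1] over one copy's data."""
    g = [0.0, 0.0]
    for x, y in data:
        err = w[0] * x[0] + w[1] * x[1] - y
        g[0] += 2 * err * x[0] / len(data)
        g[1] += 2 * err * x[1] / len(data)
    return g

def train(copies_data, steps=500, lr=0.1):
    """Gradient descent where every copy's gradient is averaged into shared weights."""
    w = [0.0, 0.0]  # one set of weights used by every copy
    for _ in range(steps):
        grads = [gradient(w, d) for d in copies_data]  # each copy learns from its own data
        for i in range(2):
            w[i] -= lr * sum(g[i] for g in grads) / len(grads)  # pooled update
    return w

# Hypothetical examples of the rule y = 2*x0 + 5*x1.
copy_a = [((1.0, 0.0), 2.0), ((2.0, 0.0), 4.0)]   # only ever sees the first input
copy_b = [((0.0, 1.0), 5.0), ((0.0, 3.0), 15.0)]  # only ever sees the second input

alone = train([copy_a])           # copy A learning on its own
shared = train([copy_a, copy_b])  # copies A and B pooling their updates
print([round(v, 2) for v in alone])   # second weight never moves from 0.0
print([round(v, 2) for v in shared])  # both weights learned
```

This resembles data-parallel training, in which gradients from several copies of a model are averaged. On its own, copy A never learns the second weight; pooled with copy B, the shared weights recover both.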

Geoffrey Hinton is not the only person to warn that the technology could seriously harm humanity. In April 2023, Elon Musk said he had fallen out with Google co-founder Larry Page because Page was “not taking AI safety seriously enough”. Musk told Fox News that Page wanted “digital super-intelligence... as soon as possible”.

On 16 May 2023, Sam Altman, the CEO of OpenAI (the company behind ChatGPT), testified before a US Senate Judiciary subcommittee. He said that while he believes the benefits of the tools deployed so far vastly outweigh the risks, regulation of AI is essential to mitigate the risks of increasingly powerful models.

When did chatbots first appear?

You may be surprised to learn how long 'chatterbots' (or 'chatbots', as they are now known) have been around. One of the earliest of these natural language processing (NLP) programs was Eliza, developed in the 1960s by Joseph Weizenbaum, a computer scientist at MIT. It was designed to simulate a psychotherapist, engaging users in conversation and asking questions about their problems. I have developed a web app version of 'Eliza - your personal therapist', which you can install on Android or Apple phones and tablets.
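Eliza worked by spotting keywords or patterns in what the user typed and turning the user's own words back into a question. The Python sketch below is a minimal illustration of that idea, not Weizenbaum's original program or the web app mentioned above: the rules, replies and function names are hypothetical, and the pronoun swapping ('my' becomes 'your') is a simplified version of the 'reflection' trick Eliza-style programs use.

```python
import random
import re

# Pronoun swaps so "my job" can be echoed back as "your job".
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "i'm": "you are",
}

# Hypothetical Eliza-style rules: a regex pattern and some reply templates.
RULES = [
    (r"i need (.*)", ["Why do you need {0}?", "Would it really help you to get {0}?"]),
    (r"i am (.*)", ["How long have you been {0}?", "Why do you think you are {0}?"]),
    (r"because (.*)", ["Is that the real reason?"]),
    (r".*\bmother\b.*", ["Tell me more about your mother."]),
]

# Used when no rule matches, to keep the conversation going.
DEFAULT_REPLIES = ["Please tell me more.", "How does that make you feel?"]

def reflect(fragment):
    """Swap first- and second-person words in a captured phrase."""
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())

def respond(text):
    """Return a reply from the first rule whose pattern matches the whole input."""
    cleaned = text.lower().strip(" .!?")
    for pattern, replies in RULES:
        match = re.fullmatch(pattern, cleaned)
        if match:
            groups = [reflect(g) for g in match.groups()]
            # Templates without {0} simply ignore the extra arguments.
            return random.choice(replies).format(*groups)
    return random.choice(DEFAULT_REPLIES)

if __name__ == "__main__":
    print(respond("I am worried about my job"))
```

For example, typing "I am worried about my job" produces either "How long have you been worried about your job?" or "Why do you think you are worried about your job?".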

Will AI become more intelligent than us?

The point at which AI becomes more intelligent than us is known as the 'singularity' (or 'technological singularity'), a term popularised by Vernor Vinge in his 1993 essay: The Coming Technological Singularity: How to Survive in the Post-Human Era.

The term 'singularity' is also used in cosmology, where it means a point in space-time at which the laws of physics as we know them break down: a point of infinite density and zero volume. An example is the centre of a black hole.

What is intelligence, and does AI have the same kind of intelligence as us? Geoffrey Hinton thinks that AI intelligence is very different from ours. In my opinion, AI is more about collecting, organising and distributing data (a knowledge-based intelligence). If I'm right, then in terms of sheer knowledge AI has already overtaken us, and the 'singularity' has already arrived! But are knowledge and data management the same as intelligence?

AI is very powerful and - if used by the wrong people ('bad actors') - it can cause a lot of damage.

Conclusion

In my lifetime, I have seen several apocalyptic predictions:

- Nuclear annihilations

- Aliens

- UFOs

- Robots

- Climate change

- AI

Nuclear annihilations

With the nuclear threat, we need to have the sense not to 'press the button'.

UFOs and Aliens

There is no proof that aliens exist or that UFOs are alien spacecraft.

Robots and AI

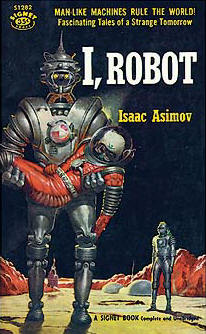

Robots and AI can be switched off and can be given built-in safety measures. The idea of hard-wired safeguards goes back to science fiction: Isaac Asimov introduced the "Three Laws of Robotics" in his 1942 short story "Runaround", which was included in the 1950 collection "I, Robot".

Source: Wikipedia

The three laws are:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Asimov later added a 'Zeroth Law', which takes precedence over the other three: "A robot may not harm humanity, or, by inaction, allow humanity to come to harm."
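Read as a specification, the laws form a strict order of priority: a lower law only matters when the higher ones cannot tell two choices apart. Asimov wrote them as fiction, not as an algorithm, but one way to picture that ordering is the toy Python sketch below, in which the action names and violation flags are hypothetical. Each candidate action is scored by which laws it would break, and Python's element-by-element tuple comparison picks the least serious option.

```python
from dataclasses import dataclass

@dataclass
class Action:
    """A candidate action with hypothetical flags for which laws it would break."""
    name: str
    harms_humanity: bool = False  # Zeroth Law
    harms_human: bool = False     # First Law
    disobeys_order: bool = False  # Second Law
    endangers_self: bool = False  # Third Law

    def violations(self):
        # Tuples compare element by element (False < True), so breaking a higher
        # law always outweighs any combination of breaches of the lower laws.
        return (self.harms_humanity, self.harms_human,
                self.disobeys_order, self.endangers_self)

def choose(actions):
    """Pick the action whose law violations are least severe in priority order."""
    return min(actions, key=Action.violations)

options = [
    Action("obey order to push a person", harms_human=True),
    Action("refuse the order", disobeys_order=True),
    Action("refuse and block the push", disobeys_order=True, endangers_self=True),
]
print(choose(options).name)
```

Here the robot refuses the order (breaking the Second Law) rather than obeying it and harming a person (breaking the First Law), and it prefers the refusal that does not also put itself at risk.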

Climate change

Of all these threats, climate change is the most likely to lead to our extinction. It is the most difficult to stop, but it can be done.

It is not the tools, weapons and environments we have created that will destroy humanity - it is us!

Also see:

Should Google be concerned about ChatGPT?


Support the Learning Pages project | ☕️ Buy me a coffee